ℹ️ You’re reading Quartz Essentials: quick, engaging outlines of the most important topics affecting the global economy.

Quantum computing

A crash course in the future of computing.

Seeking supremacy

Quantum computers are as weird as anything found in a sci-fi novel, harnessing the uncertainty at the heart of quantum physics to perform certain calculations. And like a quantum superposition, the nascent industry is in an in-between state as well. The technology works, but not yet well enough to supplant classical, binary computing, despite its promise to vastly outperform it at certain kinds of massive number crunching.

Companies like IBM and Google are working feverishly to hit what researchers call “quantum advantage,” which could have world-changing implications for fields like cryptography and artificial intelligence (but probably not laptop-changing implications, sorry). To do that, they’ll have to conquer the fundamental physical challenges of the quantum world.

It’s an inherently messy technology that tests the smartest minds in computer science. Let’s try to reduce the uncertainty about it.

Brief history

1980: Yuri Manin “first [puts] forward the notion, albeit in a rather vague form.” Paul Benioff creates the first theoretical model of a quantum computer.

1981: Richard Feynman argues that quantum systems can only be simulated efficiently on a quantum computer.

1985: David Deutsch shows that Turing’s “universal computer” would not be as universal as a quantum computer.

1994: Peter Shor develops an algorithm that lets a quantum computer factor large numbers into their prime factors.

1998: Three researchers build the first quantum computer, a 2-qubit machine that only works for “a few nanoseconds.”

2001: Shor’s algorithm is used to factor 15 into 5 and 3 using a 7-qubit computer.

2011: The D-Wave One, arguably the first commercial quantum computer, goes on sale.

2013: The University of Bristol in the UK puts a 2-qubit quantum computer online for anyone to use.
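
The 2001 milestone is easier to appreciate with the arithmetic spelled out. In Shor's algorithm, the quantum computer's job is to find the period (or "order") r of a^x mod N, the smallest r with a^r mod N = 1; turning that period into factors is ordinary arithmetic. The Python sketch below is only an illustration: it brute-forces the period classically, which works for a number as small as 15 but becomes hopeless for the huge numbers used in encryption, and the choice a = 7 is an arbitrary assumption, not a detail of the 2001 experiment.

```python
from math import gcd

def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    This is the step Shor's algorithm hands to the quantum computer;
    here it is brute-forced classically, which only works for tiny n.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_classical(n: int, a: int):
    """Try to split n using the order of a modulo n; returns None on failure."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g  # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: try a different a
    x = pow(a, r // 2, n)
    if x == n - 1:
        return None  # a**(r/2) is -1 mod n: try a different a
    return gcd(x - 1, n), gcd(x + 1, n)

print(find_order(7, 15))      # 4, since 7**4 = 2401 = 160 * 15 + 1
print(shor_classical(15, 7))  # (3, 5)
```

With r = 4, the sketch computes 7^2 mod 15 = 4, and the greatest common divisors of 3 and 5 with 15 are the two prime factors.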

Explain it like I’m 5!

Traditional computers consist of billions of transistors, microscopic devices that can be turned on or off, which translates into a one or a zero.

Quantum computers exploit what’s called “quantum superposition.” At a subatomic scale, particles have the ability to exist in more than one state at a time, akin to a spinning coin that is neither heads nor tails until it lands. A qubit can hold a blend of 0 and 1, and every qubit you add doubles the number of states the machine can represent at once, so a quantum computer’s capacity grows exponentially with its qubit count. That’s why they get very powerful, very fast.
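
To put numbers on that growth, here’s a minimal Python sketch. An n-qubit state is described by 2^n complex amplitudes, so each added qubit doubles what a classical machine must track to imitate it. The sketch assumes each amplitude is stored as a 16-byte double-precision complex number, as a typical classical simulator would; that’s an assumption about simulation, not a property of quantum hardware, and it doesn’t mean a quantum computer simply runs 2^n separate calculations.

```python
import math

BYTES_PER_AMPLITUDE = 16  # assumption: one complex128 value (two 64-bit floats)

def num_amplitudes(n_qubits: int) -> int:
    """An n-qubit state is described by 2**n complex amplitudes."""
    return 2 ** n_qubits

def uniform_superposition(n_qubits: int) -> list[complex]:
    """Equal weight on every basis state, like a 'spinning coin' for each qubit."""
    size = num_amplitudes(n_qubits)
    amp = complex(1 / math.sqrt(size), 0)
    return [amp] * size

for n in (1, 2, 10, 30, 50):
    size = num_amplitudes(n)
    print(f"{n:>2} qubits: {size:,} amplitudes, {size * BYTES_PER_AMPLITUDE:,} bytes")
# 30 qubits already need 16 GiB of memory; 50 qubits need 16 PiB.

state = uniform_superposition(3)
total_probability = sum(abs(a) ** 2 for a in state)
print(len(state), round(total_probability, 12))  # 8 1.0
```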

When you actually measure a qubit (the quantum version of a bit), it can only give one result, like a coin that has landed, a cat that is either dead or alive, or a regular computer bit. But scientists have developed mathematical tricks to extract more information than that would suggest. Combined with the enormous number of states qubits can represent, this means quantum computers could theoretically solve certain problems that are far too hard for classical computers.
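
A toy simulation shows what measurement does. The hypothetical `measure` helper below applies the Born rule: each outcome comes up with probability equal to the squared magnitude of its amplitude. For a qubit in an equal superposition, any single measurement gives just 0 or 1; only the statistics over many runs reveal the 50/50 split.

```python
import math
import random

def measure(amplitudes: list[complex], rng: random.Random) -> int:
    """Born rule: return basis state i with probability |amplitude_i|**2."""
    probs = [abs(a) ** 2 for a in amplitudes]
    return rng.choices(range(len(amplitudes)), weights=probs, k=1)[0]

# One qubit in an equal superposition of |0> and |1> (the "spinning coin").
plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]

rng = random.Random(42)  # fixed seed so the run is reproducible
shots = [measure(plus, rng) for _ in range(10_000)]
print("single shot:", shots[0])                # always just 0 or 1, never "both"
print("fraction of 1s:", sum(shots) / len(shots))  # close to 0.5 over many shots
```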

Quotable

“The one part of Microsoft where they put up slides that I truly do not understand.”

Origin story

When the world’s tiny band of quantum computer scientists set out to make theory a reality in the 1990s, they realized that we’d been using quantum computers, kind of, for a while. Nuclear magnetic resonance (NMR) technology, the secret sauce behind MRI (magnetic resonance imaging) machines, dates back to the 1940s. A strong magnetic field aligns the spins of protons in the body, and pulses of radio waves knock them out of alignment; when the pulses stop, the protons go back to their resting state, releasing energy that carries information about what surrounds them.

An MRI uses that information to generate an image. In 1998, researchers used atoms of hydrogen and chlorine suspended in chlorophyll as qubits and used them to search a list. “NMR had actually been ahead of other fields for decades. They had done logic gates back in the ’70s. They just didn’t know what they were doing and didn’t call it logic gates,” Jonathan Jones, one of the researchers, told Gizmodo.

By the digits

$10 million: Price of the D-Wave One quantum computer in 2011

$10,000: Price of a qubit in 2019

-273°C (-459°F): Approximate temperature at which the quantum chip in IBM’s Zurich lab is maintained, just above absolute zero

17 million: Experiments run on IBM’s Q computer in its first 11 months open to the public

30 miles (50 km): Longest distance at which “quantum memories” have been linked through entanglement

15 millikelvins: Temperature Google’s Sycamore quantum chip is maintained at, which is about 200 times colder than deep space
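
For the curious, a quick Python check of the conversions behind a few of these figures. It assumes “deep space” means the roughly 2.7 K (2.725 K) temperature of the cosmic microwave background; the helper names are illustrative.

```python
ABSOLUTE_ZERO_C = -273.15  # 0 kelvin expressed in degrees Celsius
DEEP_SPACE_K = 2.725       # assumption: cosmic microwave background temperature
KM_PER_MILE = 1.609344

def celsius_to_fahrenheit(c: float) -> float:
    return c * 9 / 5 + 32

def celsius_to_kelvin(c: float) -> float:
    return c - ABSOLUTE_ZERO_C

ibm_chip_c = -273.0  # the rounded figure quoted for IBM's chip
print(f"{celsius_to_fahrenheit(ibm_chip_c):.1f} °F")  # -459.4 °F
print(f"{celsius_to_kelvin(ibm_chip_c):.2f} K")       # 0.15 K: the rounded figure is within 0.15 K of absolute zero

sycamore_k = 15e-3  # 15 millikelvins
print(f"{DEEP_SPACE_K / sycamore_k:.0f}x colder than deep space")  # 182x, i.e. "about 200 times"

print(f"{50 / KM_PER_MILE:.1f} miles")  # 31.1 miles
```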

Million-dollar question: What will quantum computers do?

They could theoretically solve some very knotty problems, like predicting the weather, breaking encryption schemes, or making artificial intelligence much more intelligent. But we’re still a long way off. Researchers talk about two milestones along the way: “quantum supremacy” and “quantum advantage.”

Quantum supremacy: This means that a quantum computer can perform a calculation that no classical computer could finish in any practical amount of time. In 2019, Google claimed its 54-qubit Sycamore processor solved a problem in 200 seconds that would take a supercomputer 10,000 years to crack. IBM scientists, however, argued that Google had underestimated how much disk storage a supercomputer could bring to bear, and that a properly configured supercomputer could solve it in 2.5 days at most.
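
The gap between those two claims is easiest to see as a speedup factor. This back-of-the-envelope Python sketch takes both estimates at face value and assumes a 365.25-day year.

```python
SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY  # assumption: 365.25-day year

sycamore_s = 200                          # Sycamore's reported run time
google_estimate_s = 10_000 * SECONDS_PER_YEAR  # Google's classical estimate
ibm_estimate_s = 2.5 * SECONDS_PER_DAY         # IBM's upper-bound estimate

print(f"Google's claimed speedup: ~{google_estimate_s / sycamore_s:.2e}x")  # ~1.58e+09x
print(f"IBM's counter-estimate:   ~{ibm_estimate_s / sycamore_s:,.0f}x")    # ~1,080x
```

Either way Sycamore was faster at this one task; the argument was over whether the classical alternative was impossible in practice (billions of times slower) or merely inconvenient (about a thousand times slower, a long weekend of supercomputer time).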

Quantum advantage: That’s when quantum computers are demonstrably better at real, useful tasks we currently use classical computers for. IBM research director Dario Gil told an audience at CES 2020 that we’ll hit it this decade, and part of what we’re waiting for is skilled programmers to start using quantum computers.

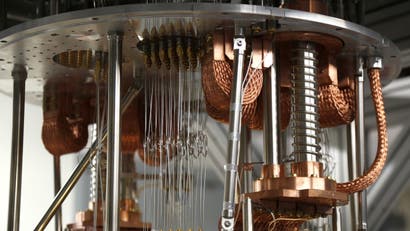

Watch this!

Chill out to the calming sounds of an attractively steampunk quantum computer at IBM’s Q Labs, perhaps to cool down after watching Quartz’s quantum-computing explainer.

This one weird trick!

A qubit might be represented by the spin of an electron or nucleus; keeping track of those is a finicky process that can be interrupted by literally the slightest change in temperature or movement, or by electromagnetic interference from surrounding electronics. (That’s why the qubits are kept near absolute zero, to make them as stable as possible.)

Quantum computers also need error correction. Classical computing offers a simple model: in a repetition code, each bit is stored three times, so 1 becomes 111. If one copy gets corrupted, you can use “majority rule” to fix it. If one of the 1s becomes a 0, the two intact 1s “outvote” it.
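
Here’s that classical repetition code as a minimal Python sketch (the function names are illustrative). It also shows the scheme’s limit: if two of the three copies are corrupted, the majority is wrong.

```python
def encode(bit: int) -> list[int]:
    """Repetition code: store the bit three times (1 -> [1, 1, 1])."""
    return [bit] * 3

def flip(codeword: list[int], position: int) -> list[int]:
    """Simulate noise corrupting the copy at `position`."""
    corrupted = codeword.copy()
    corrupted[position] ^= 1
    return corrupted

def decode(codeword: list[int]) -> int:
    """Majority rule: whichever value at least two of the three copies hold wins."""
    return 1 if sum(codeword) >= 2 else 0

noisy = flip(encode(1), 0)     # [0, 1, 1]
print(decode(noisy))           # 1: the two intact copies outvote the bad one
print(decode(flip(noisy, 1)))  # 0: two errors fool a three-copy code
```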

Mathematician Peter Shor proved that quantum error-correcting codes are possible back in 1995. But for such codes, “the theoretical minimum is five error-correcting qubits for every qubit devoted to computation”; with mere dozens of qubits available on the most powerful quantum computers, that’s a lot of resources.
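
Quantum error correction can’t simply copy qubits (the no-cloning theorem forbids it), and it can’t peek at them without destroying the superposition. The textbook three-qubit bit-flip code works around both problems, and it’s swapped in here as the simplest illustration, not one of the larger codes the quote refers to: it spreads one qubit across three entangled ones and checks only parities, which reveal where a flip happened without revealing the stored state. This Python sketch is a toy state-vector model with hypothetical helper names. Reading the parities straight off the amplitudes stands in for the extra “ancilla” qubits real hardware uses, and the code catches only bit flips, not phase errors, which is why codes that handle every kind of single-qubit error need at least five qubits.

```python
# Basis state (q0, q1, q2) -> amplitude; unlisted basis states have amplitude 0.
State = dict[tuple[int, int, int], complex]

def encode(alpha: complex, beta: complex) -> State:
    """alpha|0> + beta|1>  ->  alpha|000> + beta|111> (entangled, not copied)."""
    return {(0, 0, 0): alpha, (1, 1, 1): beta}

def apply_x(state: State, qubit: int) -> State:
    """Bit-flip (Pauli-X) error on one qubit: flips that bit in every basis state."""
    out: State = {}
    for bits, amp in state.items():
        flipped = list(bits)
        flipped[qubit] ^= 1
        out[tuple(flipped)] = amp
    return out

def syndrome(state: State) -> tuple[int, int]:
    """Parity checks q0 xor q1 and q1 xor q2.

    A bit-flip error changes every basis state in the superposition the same
    way, so they all share these parities: reading them locates the error
    without revealing alpha or beta. (Real hardware measures the parities
    with extra ancilla qubits; this toy model just reads them off.)
    """
    bits = next(b for b, amp in state.items() if amp != 0)
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])

# Which qubit to flip back for each syndrome (None means no error detected).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(state: State) -> State:
    qubit = CORRECTION[syndrome(state)]
    return state if qubit is None else apply_x(state, qubit)

logical = encode(0.6, 0.8)  # arbitrary example state: |0.6|^2 + |0.8|^2 = 1
for q in range(3):
    damaged = apply_x(logical, q)
    print(q, syndrome(damaged), correct(damaged) == logical)
```

Like its classical cousin, this code is fooled by two simultaneous flips: the parities then point at the wrong qubit, and “correcting” it swaps alpha and beta.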
