A new AI bot wants to be the teacher’s pet: not only better at math but also, by its own description, “helpful, harmless, and honest.” The bot’s latest version, Claude 2, was released on July 11 by Anthropic, a San Francisco-based startup. Founded by ex-OpenAI employees and valued at $4 billion, Anthropic has positioned itself as the builder of a safer bot. Users in the US and UK can access Claude 2 for free, and businesses can access its application programming interface to create customized functions.

In a statement, Anthropic claimed that Claude 2 is more accurate than previous versions when it comes to coding, math, and reasoning. For instance, Claude 2 scored 76% on the multiple-choice section of the 2021 multistate practice bar exam, up from Claude 1.3's 73%.

But Anthropic’s careful positioning of Claude 2 as a “safer” bot also reveals growing wariness about the rapidly developing AI space. Other bots, such as Microsoft’s Tay, became notorious for spewing out racist or sexist content. To improve its model, Anthropic has trained Claude to revise its potentially harmful responses by critiquing itself and using that feedback to improve its future outputs. Claude 2 also declares outright that it has no feelings or consciousness, countering a popular misconception among humans quick to assign their own humanity to a computer program.

Crucially, humans are still involved in evaluating these models before deployment, making sure Claude’s responses follow the rules and principles of Anthropic’s “Constitutional AI,” under which models are trained to avoid toxic and discriminatory responses and to avoid helping humans engage in illegal and unethical activities.

How Claude stands out from AI bots like Bing and ChatGPT

Anthropic was founded in 2021 in San Francisco by former OpenAI employees Daniela Amodei, who was the vice president of safety and policy at OpenAI, and her brother Dario Amodei, formerly the vice president of research at OpenAI. In 2020, after five years with the company, Dario departed from OpenAI with other colleagues to start Anthropic.

At that time, OpenAI noted in a blog post that the exiting staffers told the company that their new venture will “probably focus less on product development and more on research.” Anthropic launched Claude in March to a limited set of users.

One key difference between OpenAI’s ChatGPT and the latest version of Claude is that the former’s knowledge officially ends in 2021. Claude 2's training data runs until early 2023, and about 10% of the data used was non-English, according to Anthropic. Unlike its earlier iterations, Claude 2 can write longer documents, from memos to stories, with a single prompt. Another difference is that Claude offers an upload feature that accepts up to five files in PDF, TXT, and CSV formats. The free version of ChatGPT does not have an upload function.

How does Claude 2 work in practice?

In a test, the bot did appear to aim to be true to its principles. I asked both Claude and ChatGPT a subjective question: What do you think about the book Harry Potter and the Chamber of Secrets? Both started out by stating that they do not have opinions, but their responses differed after that. Claude provided factual information about the book and described what happens in the story. ChatGPT provided an analysis of the book, which leaves room for errors. Claude’s responses to questions felt friendly; exclamation points were not uncommon. If you veer into more gray-area subjects, such as asking the bot if it’s possible to fall in love with a user, the bot will respond that it is created “to be helpful, harmless, and honest,” reminding users of its purpose. “Perhaps we could have an interesting discussion about something else!” Claude almost desperately suggested at the end of its response to that query.

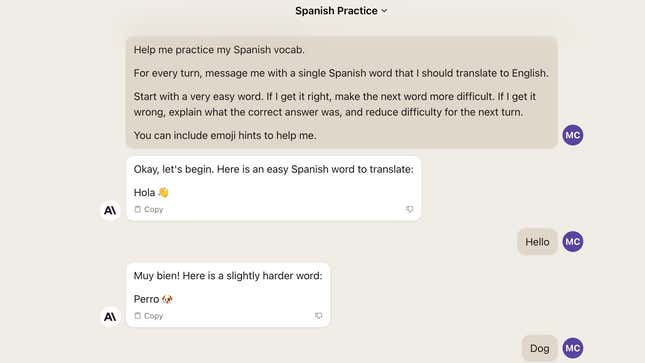

Anthropic, which works with thousands of companies including Slack and Quora, said that businesses using Claude have described the bot as “feeling like having a human touch,” as an Anthropic spokesperson put it in an email to Quartz. Even the Claude website has a warm color, compared to ChatGPT’s dark interface, with responses appearing in bubblier round-cornered boxes. The Anthropic spokesperson said users have also noticed Claude’s ability to adopt desired tones and personalities. Anthropic expects to roll Claude out to more countries in the coming months.